Robots.txt

The robots.txt file is a text file that defines which parts of a domain a web crawler may crawl and which it may not. In addition, the robots.txt file can include a link to the XML sitemap. With robots.txt, individual files in a directory, complete directories, subdirectories or entire domains can be excluded from crawling. The robots.txt file is stored in the root of the domain. It is the first document that a bot accesses when it visits a website. The bots of the biggest search engines, such as Google and Bing, follow its instructions. Beyond these, there is no guarantee that a bot will adhere to the robots.txt requirements.

Background

Robots.txt helps to control the crawling of search engine robots. In addition, the robots.txt file can contain a reference to the XML sitemap to inform crawlers about the URL structure of a website. Individual subpages can also be excluded from indexing using the robots meta tag with, for example, the value noindex.

Structure of the protocol

The so-called "Robots Exclusion Standard Protocol" was published in 1994. This protocol states that search engine robots (also: user agents) first look for a file named "robots.txt" and read its instructions before they start crawling. A robots.txt file therefore needs to be placed in the domain's root directory with this exact file name in lowercase letters, because the file name is case sensitive. The same applies to the directory paths listed in robots.txt.
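Because the file always sits at the root of the host, its location can be derived from any page URL. The following is a minimal Python sketch using only the standard library; the helper name `robots_url` and the example URL are illustrative assumptions, not part of the protocol.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt location for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host, replace the path with the fixed lowercase
    # file name, and drop any query string or fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("http://www.example.com/shop/item.html?id=3"))
```

Note that the file name is written in lowercase here on purpose, since a request for `/Robots.txt` would be a different path.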

However, it should be noted that not all crawlers adhere to these rules, so robots.txt does not offer any access protection. Some search engines still index blocked pages and show them in the search results without a description text. This occurs especially with extensively linked pages: through backlinks from other websites, a bot can discover a URL even though robots.txt keeps it from crawling the page. However, the most important search engines like Google, Yahoo and Bing comply with robots.txt.

Creation and control of robots.txt

A robots.txt file is easy to create with a text editor, because it is saved and read as plain text. In addition, free tools are available online that ask for the most important information and create the file automatically. Robots.txt can even be created and tested with the Google Search Console.

Each file consists of two blocks. First, the creator specifies the user agent(s) to which the instructions apply. This is followed by a block introduced by "Disallow", which lists the pages to be excluded from crawling. Optionally, the second block can instead begin with "Allow", followed by a third "Disallow" block that narrows down the permission.

Before the robots.txt is uploaded to the root directory of the website, the file should always be checked for correctness. Even the smallest syntax errors could cause a user agent to ignore the directives and crawl pages that should not appear in the search engine index. To check whether the robots.txt file works as it should, an analysis can be carried out in the Google Search Console under "Status" -> "Blocked URLs". [1] In the area "Crawling", a robots.txt tester is available.
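A draft can also be sanity-checked locally before upload. The sketch below uses Python's standard `urllib.robotparser`; the helper `check_rules`, the draft file and the expectations are hypothetical examples. The standard-library parser applies the first matching rule and does not interpret wildcards, so its verdicts may differ from those of a particular search engine.

```python
from urllib.robotparser import RobotFileParser


def check_rules(robots_txt, agent, expectations):
    """Return the URLs whose crawlability differs from what was expected."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url, expected in expectations.items()
            if parser.can_fetch(agent, url) != expected]


# Hypothetical draft file and expectations, for illustration only.
draft = """\
User-agent: *
Allow: /private/press.html
Disallow: /private/
"""
expectations = {
    "http://www.example.com/": True,
    "http://www.example.com/private/press.html": True,
    "http://www.example.com/private/accounts.html": False,
}

# An empty list means every expectation holds.
print(check_rules(draft, "Googlebot", expectations))
```

A misspelled directive such as `Disalow` is silently skipped by the parser, so the blocked URL would show up in the returned list. This is the kind of small syntax error the paragraph above warns about.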

Exclusion of pages from the index

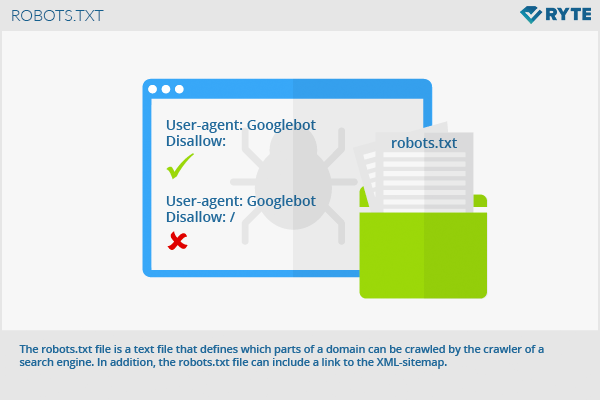

The simplest structure of a robots.txt is as follows:

User-agent: Googlebot
Disallow:

This code allows the Googlebot to crawl all pages. The opposite, which prohibits the Googlebot from crawling the entire website, looks like this:

User-agent: Googlebot
Disallow: /

The "User-agent" line names the user agents to which the directives apply. For example, the following entries could be made:

- Googlebot (Google search engine)

- Googlebot-Image (Google-image search)

- Adsbot-Google (Google AdWords)

- Slurp (Yahoo)

- bingbot (Bing)

If several user agents are to be addressed, each bot gets its own line. An overview of all common commands and parameters for robots.txt can be found on mindshape.de.

A link to an XML sitemap can be implemented as follows:

Sitemap: http://www.domain.de/sitemap.xml
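How a crawler might pick up this reference can be sketched with Python's standard `urllib.robotparser`, whose `site_maps()` method (available from Python 3.8) returns the listed sitemap URLs, or `None` if there are none. The surrounding file content is a made-up example.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content that includes the Sitemap line shown above.
robots_txt = """\
User-agent: *
Disallow: /temp/
Sitemap: http://www.domain.de/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# site_maps() returns None when no Sitemap line is present,
# so fall back to an empty list.
sitemaps = parser.site_maps() or []
print(sitemaps)
```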

Use Robots.txt with wildcards

The Robots Exclusion Protocol does not allow regular expressions (wildcards) in the strictest sense. However, the big search engine operators support certain expressions such as * and $. These are usually used only with the Disallow directive to exclude files, directories, or websites.

- The character * serves as a placeholder for any string of characters. Crawlers that support wildcard syntax won't crawl URLs that match the resulting pattern. In the User-agent line, * means that the directive applies to all crawlers. An example:

User-agent: *
Disallow: *autos

This directive would block all URLs containing the string "autos" from being crawled. This is often used for parameters such as session IDs (for example, with Disallow: *sid) or URL parameters (for example, with Disallow: /*?) to exclude so-called no-crawl URLs.

- The character $ anchors a rule to the end of the URL. The crawler would not crawl content whose URL ends with the given character string. An example:

User-agent: *
Disallow: *.autos$

With this directive, all content ending with .autos would be excluded from crawling. Similarly, this can be transferred to different file formats: for example, .pdf (with Disallow: /*.pdf$), .xls (with Disallow: /*.xls$) or other file formats such as images, program files or log files can be selected to keep search engines from crawling them. Again, the directive refers to the behavior of all crawlers (User-agent: *) that support wildcards.
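The matching behavior described for * and $ can be approximated by translating a rule into a regular expression. This is a simplified sketch, not the implementation of any particular search engine; the function names `rule_to_regex` and `is_disallowed` and the sample paths are assumptions made for illustration.

```python
import re


def rule_to_regex(rule: str) -> re.Pattern:
    """Translate a Disallow value with * and $ into a compiled regex."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape everything literally, then let each * match any characters.
    body = ".*".join(re.escape(part) for part in rule.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(path: str, rules: list[str]) -> bool:
    """True if any rule matches the path, starting from its beginning."""
    return any(rule_to_regex(rule).match(path) for rule in rules)


print(is_disallowed("/gebrauchte-autos/liste.html", ["*autos"]))   # contains "autos"
print(is_disallowed("/docs/report.pdf", ["/*.pdf$"]))              # ends with .pdf
print(is_disallowed("/docs/report.pdf?download=1", ["/*.pdf$"]))   # does not end with .pdf
```

Rules are matched from the start of the path, which is why `*autos` needs the leading * to catch the string anywhere in the URL.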

Example

# robots.txt for http://www.example.com/
User-agent: UniversalRobot/1.0
User-agent: my-robot
Disallow: /sources/dtd/

User-agent: *
Disallow: /nonsense/
Disallow: /temp/
Disallow: /newsticker.shtml
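To see how the two groups in this example apply to different bots, the file can be fed to Python's standard `urllib.robotparser`. This is only a sketch of one parser's interpretation; the bot name `SomeOtherBot`, the helper `can` and the tested paths are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

EXAMPLE = """\
# robots.txt for http://www.example.com/
User-agent: UniversalRobot/1.0
User-agent: my-robot
Disallow: /sources/dtd/

User-agent: *
Disallow: /nonsense/
Disallow: /temp/
Disallow: /newsticker.shtml
"""

parser = RobotFileParser()
parser.parse(EXAMPLE.splitlines())


def can(agent: str, path: str) -> bool:
    """Ask the parsed rules whether agent may fetch the given path."""
    return parser.can_fetch(agent, "http://www.example.com" + path)


# my-robot is named in the first group, so only that group applies to it.
print(can("my-robot", "/sources/dtd/page.dtd"))
print(can("my-robot", "/temp/draft.html"))
# A bot that is not named falls back to the "User-agent: *" group.
print(can("SomeOtherBot", "/temp/draft.html"))
print(can("SomeOtherBot", "/sources/dtd/page.dtd"))
```

A bot that finds its own name in a group follows that group alone, which is why `my-robot` is not bound by the rules listed under `*`.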

Relevance for SEO

The robots.txt of a page has a considerable influence on search engine optimization. Pages excluded by robots.txt usually cannot rank, or they appear in the SERPs only with a placeholder text. Restricting the user agents too much can therefore cause disadvantages in the ranking.

Directives that are too permissive can result in the crawling of pages that contain duplicate content or that belong to sensitive areas such as a login. When creating the robots.txt file, accuracy in the syntax is essential. The latter also applies to the use of wildcards, which is why a test in the Google Search Console makes sense. [2] However, it is important to note that directives in the robots.txt do not prevent indexing. To keep pages out of the index, webmasters should use the noindex meta tag instead and exclude individual pages from indexing by specifying it in the head of each page.
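Whether a page actually carries the noindex meta tag can be checked with a few lines of Python. This is a rough sketch based on the standard `html.parser` module; the class `RobotsMetaParser`, the function `has_noindex` and the sample markup are hypothetical, and real pages may also signal noindex in other ways that this check does not cover.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # The content attribute is a comma-separated list of directives.
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html: str) -> bool:
    """True if the markup contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))
```

A crawler can only see this tag if it is allowed to fetch the page, so a page that should be removed from the index must not be blocked in robots.txt at the same time.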

The robots.txt file is the most important way for webmasters to control the behavior of search engine crawlers. If errors occur here, websites can become impossible to find, because the URLs are not crawled at all and thus cannot appear in the index of the search engines. The question of which pages are to be indexed and which are not has an indirect impact on the way in which search engines view or even register websites. In principle, the correct use of a robots.txt does not have any positive or negative effects on the actual ranking of a website in the SERPs. Rather, it is used to control the work of the Googlebot and make optimal use of the crawl budget. The correct use of the file ensures that all important areas of the domain are crawled and that up-to-date content is therefore indexed by Google.

robots.txt art

Some programmers and webmasters also use the robots.txt to hide amusing messages in it. However, this "art" has no impact on crawling or search engine optimization.

References

- [1] Information about the robots.txt file. support.google.com. Accessed on 11/06/2015

- [2] The Ultimate Guide to Blocking Your Content in Search. internetmarketingninjas.com. Accessed on 11/06/2015

Web Links