Crawler

A crawler is a computer program that automatically searches documents on the Web. Crawlers are programmed primarily for repetitive actions, so that browsing is automated. Search engines are the most frequent users of crawlers: they use them to browse the Internet and build an index. Other crawlers search for different types of information, such as RSS feeds or email addresses. The term crawler comes from WebCrawler, one of the first search engines on the Internet. Common synonyms are “bot” and “spider.” The best-known web crawler is the Googlebot.

How does a crawler work?

In principle, a crawler is like a librarian. It looks for information on the Web, which it assigns to certain categories, and then indexes and catalogues it so that the crawled information is retrievable and can be evaluated.

The operations a crawler performs must be defined before a crawl starts, so every instruction is specified in advance. The crawler then executes these instructions automatically. Its results are used to build an index, which can be accessed through output software.

The information a crawler will gather from the Web depends on the particular instructions.
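The process described above can be illustrated with a minimal sketch. It assumes a tiny in-memory "web" (the `PAGES` dictionary and its `example.com` URLs are hypothetical) instead of real HTTP requests, and the names `LinkAndTextParser` and `crawl` are chosen only for this illustration. The crawler starts from a seed URL, follows the links it finds, and builds a simple word index from the visited pages.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "web": URL -> HTML. A real crawler would fetch over HTTP.
PAGES = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a> home page',
    "https://example.com/a": '<a href="/b">B</a> alpha page',
    "https://example.com/b": '<a href="/">home</a> beta page',
}


class LinkAndTextParser(HTMLParser):
    """Collects the href targets of <a> tags and the words in the page text."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

    def handle_data(self, data):
        self.words.extend(data.lower().split())


def crawl(seed, pages, max_pages=100):
    """Breadth-first crawl from seed; returns (visit order, word index)."""
    frontier = deque([seed])  # URLs waiting to be visited
    seen = {seed}             # URLs already queued, to avoid loops
    order = []
    index = {}                # word -> set of URLs containing that word
    while frontier and len(order) < max_pages:
        url = frontier.popleft()
        html = pages.get(url)
        if html is None:
            continue  # unknown URL: this sketch simply skips it
        order.append(url)
        parser = LinkAndTextParser()
        parser.feed(html)
        for word in parser.words:
            index.setdefault(word, set()).add(url)
        for href in parser.links:
            target = urljoin(url, href)  # resolve relative links
            if target not in seen:
                seen.add(target)
                frontier.append(target)
    return order, index


order, index = crawl("https://example.com/", PAGES)
```

Looking up a word in `index` then returns the set of pages that contain it, which is the role the text assigns to the output software. Real crawlers add politeness delays, error handling, and far more elaborate indexing.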

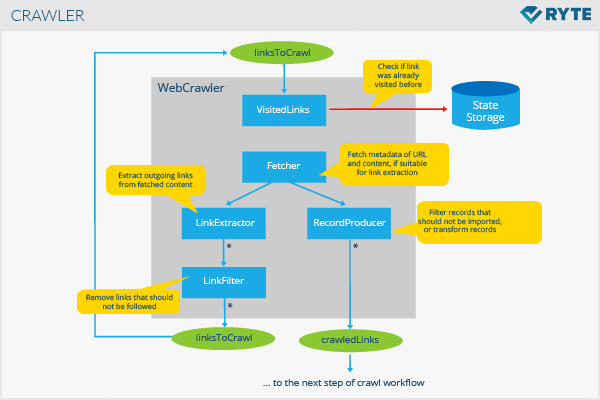

This graphic visualizes the link relationships uncovered by a crawler.

Applications

The classic goal of a crawler is to create an index. Crawlers are thus the basis for the work of search engines: they first scour the Web for content and then make the results available to users. Focused crawlers, for example, concentrate on current, topically relevant websites when indexing.

Web crawlers are also used for other purposes:

- Price comparison portals search for information on specific products on the Web, so that prices or data can be compared accurately.

- In the area of data mining, a crawler may collect publicly available e-mail or postal addresses of companies.

- Web analysis tools use crawlers or spiders to collect data on page views and on incoming or outbound links.

- Crawlers supply information hubs, such as news sites, with data.
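As an illustration of the web analysis use case, the following sketch derives incoming links from the outbound links a crawl has recorded. The `OUTBOUND` data and the function name `inbound_links` are hypothetical and stand in for whatever a real tool would collect.

```python
# Hypothetical crawl result: page -> list of pages it links to.
OUTBOUND = {
    "/": ["/products", "/blog"],
    "/products": ["/", "/blog"],
    "/blog": ["/"],
    "/orphan": ["/"],
}


def inbound_links(outbound):
    """Invert an outbound-link map into page -> set of pages linking to it."""
    inbound = {}
    for source, targets in outbound.items():
        inbound.setdefault(source, set())  # keep pages nobody links to
        for target in targets:
            inbound.setdefault(target, set()).add(source)
    return inbound


inbound = inbound_links(OUTBOUND)

# (outbound count, inbound count) per page
report = {
    page: (len(OUTBOUND.get(page, [])), len(sources))
    for page, sources in inbound.items()
}
```

In this sample data, `/orphan` has no incoming links at all, which is the kind of finding such a report is meant to surface.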

Examples of a crawler

The best-known crawler is the Googlebot, but there are many others, since search engines generally operate their own web crawlers. For example:

- Bingbot

- Slurp Bot

- DuckDuckBot

- Baiduspider

- Yandex Bot

- Sogou Spider

- Exabot

- Alexa Crawler[1]

Crawler vs. Scraper

Unlike a scraper, a crawler only collects and prepares data. Scraping, by contrast, is regarded in SEO as a black hat technique: it copies content from other sites in order to publish it, unchanged or slightly modified, on one’s own website. While a crawler mostly deals with metadata that is not visible to the user at first glance, a scraper extracts tangible content.

Blocking a crawler

If you don’t want certain crawlers to browse your website, you can exclude their user agent using robots.txt. However, this alone cannot prevent content from being indexed by search engines. The noindex meta tag or the canonical tag is better suited to that purpose.
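The following sketch shows how a robots.txt exclusion is interpreted, using Python's standard `urllib.robotparser` module. The robots.txt content, the user agent name `BadBot`, and the `example.com` URLs are assumptions made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one crawler entirely and keep
# all other crawlers out of /private/.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(user_agent, url):
    """Return True if robots.txt permits user_agent to crawl url."""
    return parser.can_fetch(user_agent, url)


blocked_bot = allowed("BadBot", "https://example.com/page")
other_bot = allowed("Googlebot", "https://example.com/page")
other_bot_private = allowed("Googlebot", "https://example.com/private/data")
```

This only models whether a well-behaved crawler may fetch a URL. As noted above, it says nothing about whether the URL ends up in a search index.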

Significance for search engine optimization

Web crawlers like the Googlebot provide the basis for ranking websites in the SERP by crawling and indexing them. They follow permanent links on the WWW and within websites. For each website, every crawler has a limited time frame and budget available. Website owners can make more effective use of the Googlebot's crawl budget by optimizing the website structure, such as the navigation. URLs deemed more important due to a high number of sessions and trustworthy incoming links are usually crawled more often. Certain measures help control crawlers like the Googlebot: the robots.txt file, which can give concrete instructions not to crawl certain areas of a website, and the XML sitemap. The sitemap is submitted in the Google Search Console and provides a clear overview of the website's structure, making it clear which areas should be crawled and indexed.
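To make the XML sitemap concrete, here is a minimal sketch that generates one with Python's standard `xml.etree.ElementTree` module. The namespace is the one defined by the sitemaps.org protocol. The function name `build_sitemap`, the URLs, and the date are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Build a sitemap string from (loc, lastmod or None) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            # lastmod is optional in the protocol
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages of a small site.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2019-05-28"),
    ("https://example.com/products", None),
])
```

The resulting string would typically be saved as a file such as `sitemap.xml` on the web server before being submitted to the search engine.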

References

- [1] Web Crawlers. www.keycdn.com. Accessed on May 28, 2019.
